Seven years ago, I had just graduated from university and moved to Ulm to take a job at BMW Car IT to develop infotainment software for the (BMW) “cars of the future.” Last October, after four positions across three different teams, I decided to move on. Time to recap.

Originally, I wanted to cover my entire career at BMW in this blog post. The first two and a half years would already fill a 2,500-word essay, though, so I’ve decided to split it into a series of shorter posts. This first post covers my day-to-day work for the first two years in the software integration team.

A shift in how the automotive industry builds software

I joined right at the beginning of the development cycle for what would be the first infotainment system partly developed by BMW. For previous head unit systems, the hardware supplier (the so-called “Tier 1”) had been fully responsible for developing the software, except for the BMW-themed user interface. BMW wanted to change this with the software targeting cars released in 2018. We can see the same transition taking place at other auto manufacturers, too: Daimler has Daimler TSS, which is now called “Mercedes-Benz Tech Innovation”; Audi founded e.solutions; Volkswagen founded CARIAD SE in 2020; Tesla has always developed its software in-house.

Two major reasons motivated this change. First, developing the software from scratch is becoming both too complicated and too expensive due to the size and feature set of modern infotainment systems. Second, the auto manufacturers now consider their infotainment and user interface part of their value proposition and an avenue to new revenue streams. Everybody wants to be the best in connectivity, offer the smoothest integrations with music and video streaming services, or just ship the funniest easter eggs. Most companies in this space are concerned that ignoring the software will cost them the customer experience and hand it to the tech giants. Considering Android Auto’s success, that is not an unreasonable assumption.

Take some 250 Linux people, put them together, get infotainment in return

BMW needed its own infotainment system. To build it, they had hired a sizable team of young and eager Linux enthusiasts, a significant chunk of whom had prior experience at Nokia before it closed its site in Ulm. About 200 people in Ulm plus another 60-70 in Munich worked for BMW Car IT GmbH, the Munich alternative to Daimler TSS, CARIAD, and e.solutions. Most of my colleagues were Linux and open source enthusiasts, and that showed both in the pleasant atmosphere and in the final product. If you’ve used a recent desktop Linux, you’d probably find the root shell of a BMW MGU infotainment system pretty familiar. You’d encounter systemd, the systemd journal, Wayland, glibc, and a pretty normal GNU userland – that is, except for bash and coreutils, of course, because it wouldn’t be an embedded system with those, would it? I’ll leave the discussion of whether a system with a quad-core CPU and 6 GiB of RAM would ever reasonably constitute “embedded” as an exercise to the reader.

Learning to develop a car infotainment system based on Linux was an interesting challenge. There was a bit of a rivalry between the Tier 1 developers and the revolutionary open source group in Ulm: the Tier 1 often tried to do things the way they had been done before, while we tried to question everything, modernize both the product and the developer experience, and apply best practices. Running various services as root, for example, was just no longer acceptable for a product in 2018.

“The build failed again”

Right from the start, we wanted compiling and automated testing handled by a continuous integration system. Fast iterations, between two and three potentially shippable releases a day, and a system defined from a single git commit hash were best practice in 2014, and we wouldn’t settle for less.

Finding the balance between development speed and making sure the current development state is tested well enough to deliver was not easy. Possibly the biggest lesson I learned is that test stability matters, a lot. A single bug that fails the build, or a test that fails intermittently just 5 % of the time, can ruin an otherwise flawless multi-hour build and test run. Unless you have a strategy to deal with test instability and recover (such as re-running these tests, manually waiving them after inspection, or disabling them), your developers are going to be annoyed and waste a lot of time. Working hours spent on identifying and fixing such instabilities reach a positive return on investment fast.
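To put a rough number on why a 5 % flake rate hurts, here is a back-of-the-envelope sketch. It assumes every flaky test fails independently and with the same probability, which real test suites rarely do, and the test counts are made up for illustration rather than taken from our CI. The function name `pass_probability` is mine.

```python
def pass_probability(flaky_tests: int, failure_rate: float, retries: int = 0) -> float:
    """Chance that a full run stays green despite flaky tests.

    Assumes each flaky test fails independently with `failure_rate`
    and is re-run up to `retries` times before it counts as failed.
    """
    # A test only breaks the run if the first attempt and every retry fail.
    effective_failure = failure_rate ** (retries + 1)
    return (1.0 - effective_failure) ** flaky_tests


if __name__ == "__main__":
    for flaky in (1, 5, 20):
        for retries in (0, 2):
            p = pass_probability(flaky, 0.05, retries)
            print(f"{flaky:2d} flaky tests, {retries} retries: {p:.1%} green runs")
```

Under these assumptions, 20 tests that each flake 5 % of the time leave only about 36 % of runs green, while allowing two re-runs per test brings that back above 99 %. Re-running hides real intermittent bugs too, of course, so it buys time rather than fixing anything.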

One thing I think we did get right back then was serializing merge requests: testing them in order and merging each one only once it passed. This technique is now becoming more widespread among continuous integration systems. The Zuul CI system, for example, calls this approach project gating, and GitLab CI started supporting similar concepts. Gating prevented cases where two conflicting changes would each work separately but break the mainline together, which in turn would have cost developers hours of waiting for fixes.

All mail clien^W^Wbuild tools suck, this one just sucks less

To build the roughly 3550 components of the infotainment system, we needed a tool that would allow us to compile and assemble a Linux distribution. The system of choice for us was the Yocto build-your-own-distribution project with its build system BitBake.

It worked reasonably well, but I’d be lying if I said there weren’t a significant number of pitfalls to be aware of and knobs we had to adjust to keep performance from plummeting. Most of us developed a love-hate relationship with its mixture of shell scripting and Python code in the same file, and its wonderfully simple to debug caching system. In hindsight, a system whose limitations you know is probably a better choice than one where you don’t know how it’s going to fail. Or, as a colleague used to put it when he switched to a team that used Android: bitbake is the worst build system, except for all the others.

“The build failed again” again

As with tests, one of the major lessons learned was that non-determinism in the build process can end up costing your developers a lot of time. Packagers were notoriously bad at manually declaring all their dependencies. They would often forget to declare one, but the build would pass anyway, because another build that bitbake happened to run earlier in parallel had already installed the dependency into the build sysroot shared among all of these builds. This worked until a commit changed the order of the parallel builds: the dependency was no longer there in time, and the build failed.

This particular problem is now fixed in recent releases of Yocto, as recipe-specific sysroots replaced the shared build sysroot. Undeclared dependencies are just no longer present in a component’s build environment, so a missing declaration fails every time instead of only occasionally. This problem was so widespread that I developed an initial implementation of the solution and invited upstream to weigh in on it.
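The difference between the two sysroot models can be shown with a small simulation. This is a deliberately simplified sketch and not BitBake code: the recipe names `libfoo` and `app` are hypothetical, `declared` stands in for what a recipe lists in `DEPENDS`, and `needed` is what its build actually uses.

```python
from dataclasses import dataclass, field


@dataclass
class Recipe:
    declared: set[str] = field(default_factory=set)  # what the packager wrote down
    needed: set[str] = field(default_factory=set)    # what the build really uses


RECIPES = {
    "libfoo": Recipe(),
    "app": Recipe(declared=set(), needed={"libfoo"}),  # dependency forgotten
}


def build(order: list[str], per_recipe_sysroot: bool) -> list[tuple[str, set[str]]]:
    """Build recipes in `order` and return (recipe, missing deps) per failure."""
    shared_sysroot: set[str] = set()
    failures = []
    for name in order:
        recipe = RECIPES[name]
        if per_recipe_sysroot:
            # Only declared dependencies are visible to this build.
            visible = recipe.declared & shared_sysroot
        else:
            # Everything built so far is visible, declared or not.
            visible = shared_sysroot
        missing = recipe.needed - visible
        if missing:
            failures.append((name, missing))
        else:
            shared_sysroot.add(name)
    return failures


if __name__ == "__main__":
    for order in (["libfoo", "app"], ["app", "libfoo"]):
        print(order, "shared:    ", build(order, per_recipe_sysroot=False))
        print(order, "per-recipe:", build(order, per_recipe_sysroot=True))
```

With the shared sysroot, the outcome depends on scheduling: `app` builds fine when `libfoo` happens to come first and fails when it does not. With per-recipe sysroots, `app` fails in both orders, which is annoying exactly once and then gets fixed for good.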

“The build is so slow”

Build performance was another big area of concern. One of my former bosses published numbers on the scale of our system and continuous integration setup at a meeting of the GENIVI alliance in 2018: we compiled 3568 components, most of them open source, and bundled them into 30 filesystem images for four hardware variants. Our 1808 virtual cores and five TB of RAM, split across 119 virtual machines, produced around 800 system builds per week, up to 25 of which became release candidates. For each build, we ran 2426 tests, 1661 of them on hardware, the rest virtualized.

We did it!

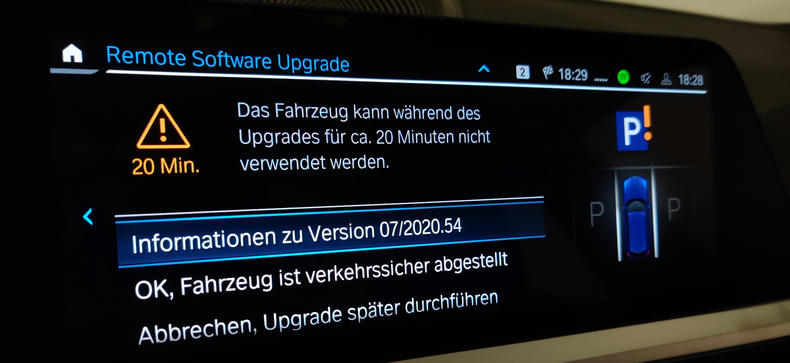

Despite the challenges and bugs we encountered, and some stressful final weeks, I’m proud to say that in 2018, we delivered a working product that does not have to hide when compared to the competition. It shipped with privilege separation, secure boot, a good customer experience, and over-the-air software updates for the whole car, which made BMW the second manufacturer after Tesla to offer them. It was an honor to be part of this journey.

In future posts in this series, I’m planning to cover various topics, such as:

- ABI compatibility and how the lack of it can ruin your development process

- Open source license compliance, convincing the lawyers, and some sneaky behind-the-scenes PR work for which I probably would have gotten in trouble

- Simplifying cross-compilation for developers using Linux namespaces

- Over-the-air software updates and their pitfalls, and why retrying things until they succeed is sometimes a good approach

- My journey into the security team

If you’re interested, watch this space or follow me on Mastodon.